NotebookLM from Google promises to revolutionize note-taking. I want to up my note-taking game, so I was intrigued. Now I’m just disappointed.

Steven Johnson (author of Extra Life, Where Good Ideas Come From, and many more) is both its chief evangelist and lead guinea pig: https://stevenberlinjohnson.com/writing-at-the-speed-of-thought-21dfb7f689e4. Based on his story, I wanted to love this tool. After all, he’s a bestselling author.

Content warning: I have not used and will not use an LLM to do my writing. If you read my work, I wrote it. I do use LLMs to critique my writing, fix grammar, etc. However, the writing remains mine. Occasionally, I will run a test to see what the current crop of LLMs is capable of.

Tiago Forte, well known in the note-taking/PKM world, produced a video (https://www.youtube.com/watch?v=iWPjBwXy_Io) describing why he liked it. I chose his video because he always puts depth and effort into his work.

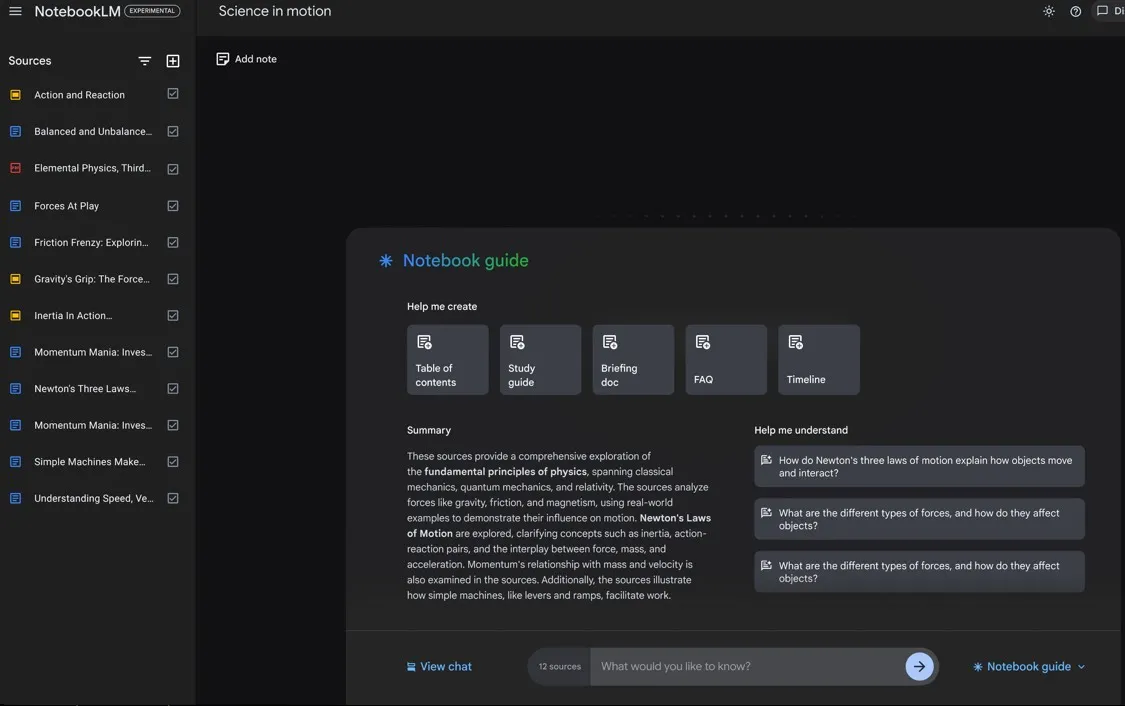

He gives three main uses for NotebookLM. The first is to upload a bunch of documents and then ask questions about that dataset. He spins this as unique to Google. Really, what it’s doing under the hood is putting these documents at the front of your context window with the LLM. (Think of a context window as the tool’s memory of a conversation with you.) It isn’t unique to Google: I tried the same thing earlier with a ChatGPT frontend called Elephas (https://elephas.app), which calls this feature your SuperBrain. I fed it a bunch of articles I had written, and it answered basic questions perfectly well, but it didn’t help me do anything useful. For example, I wanted to write a new article in an area I hadn’t covered before. I got Elephas/ChatGPT to generate an outline and then the article content. The result wasn’t very good. The tone was nothing like mine, and the facts were wrong in enough places that the draft was of no use to me.

Tiago demonstrated one useful function in NotebookLM: it provides citations for each claim. I haven’t tested their accuracy myself, but with similar tools I’ve found you still need to check every citation, so I’m not convinced this is a big win.

Sidebar: Tiago gets the LLM to write a business proposal for him and then outline a workshop he might run. Okay, no way am I taking a workshop from him now. If he can offload the training design to a tool, then he isn’t really thinking through how the learner will learn. When we design courses, they should be for humans, not for another LLM.

Next, he uses the tool to help with rewrites. He gets it to edit a draft of an existing article, and the article becomes shorter. Excellent; I’ve tried this too on occasion. But does he go through the result word by word to make sure it still has his voice? My own approach is the reverse of what he’s suggesting: I read what it spits out and, where its suggestions are improvements, rework them in my own words.

Finally, he uses Readwise (an excellent reading app) to export his reading highlights so he can interrogate them. I’m not sure how this differs from the first use; it’s just exporting your data to the LLM.

My ideal LLM use case:

- Runs locally on my machine (privacy and awareness of my energy use).

- Integrates directly into my tooling (DEVONthink for my research archive and Obsidian for note-taking).

- Helps me spot connections between notes and ideas I hadn’t considered.

- Helps me test my ideas for weaknesses by showing material in my own vault that disagrees with my claims.

- Acts as an editor, helping me make small improvements to my English and challenging me to clarify my writing.

I really want a tool like NotebookLM to be useful. I want to believe that it is accurate and not hallucinating when I ask it something about my own sources. From what I can see, NotebookLM is a lot of sizzle for very limited value.

#Influence #Ship30For30